Enhancing LLM Responses through Prompt Engineering

By Astik Dahal

Have you ever asked an AI a simple question and gotten a strangely off-topic or unhelpful answer? You’re not alone. Large Language Models (LLMs) have become incredible tools for coding, writing, and brainstorming, but sometimes they still go off the rails. The secret ingredient for keeping them on track? How we prompt them.

Below, we’ll explore how to craft prompts that produce more accurate, coherent, and creative outputs. Whether you’re building apps with AI or just messing around with chatbots, these tips can help you get better results, faster.

Why Prompt Engineering Matters

LLMs like GPT-4 can do all kinds of amazing things, from drafting emails and writing poetry to answering research questions, yet they rely on one main input: your prompt. Think of the prompt as the instructions you give a new teammate. If you’re direct and specific, you’ll get the outcome you want. If you’re vague, you risk confusion and wasted time.

Key Benefits of Good Prompting

- Saves Time: If your prompt is clear, you won’t need endless revisions.

- Improves Accuracy: A model that understands exactly what you want is less likely to drift off-topic or make things up (hallucinate).

- Increases Creativity: A well-framed prompt, such as one that sets a role, a style, or clear constraints, can draw more original output from a model.

Simple vs. Advanced Prompting

Zero-Shot Prompting

Zero-shot prompting means asking the model to do something without giving it any examples first. For instance:

What’s the capital of France?

The model answers using only the knowledge it picked up during training. This works well for straightforward questions but can fail when you need nuanced or step-by-step reasoning.

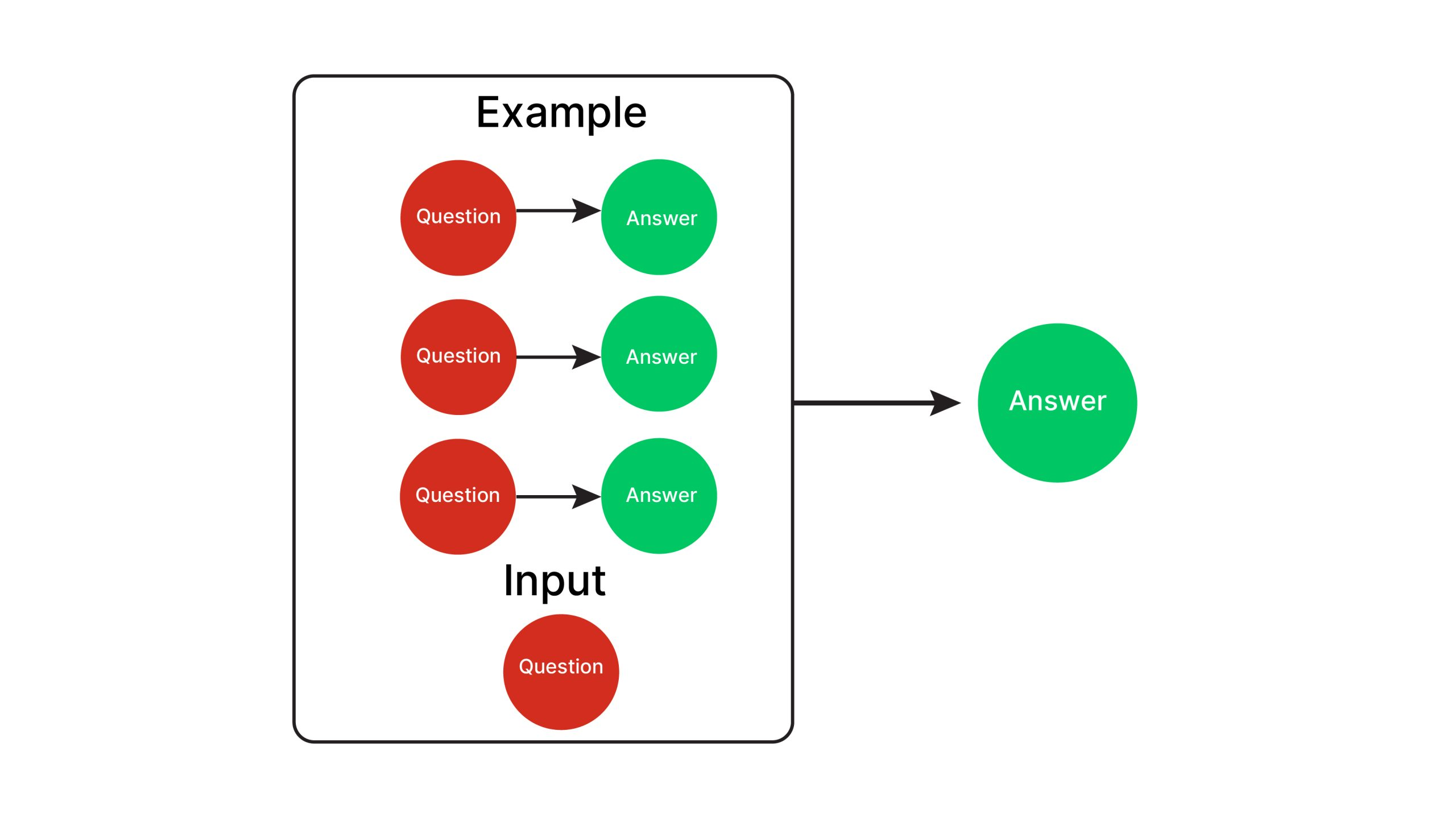

Few-Shot Prompting

Few-shot prompting means giving the model a couple of examples before you ask your actual question. It’s like showing your friend how you solved two math problems before asking them to solve a similar one:

Q: What is the capital of France?

A: Paris

Q: What is the largest planet?

A: Jupiter

Q: Who painted the Mona Lisa?

A:

The first two pairs are the examples; the model fills in the last answer (Leonardo da Vinci) in the same short format. Having seen your examples, it is more likely to match their format and style.
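If you assemble prompts in code, a few-shot prompt is just your examples formatted consistently, followed by the real question with its answer left blank for the model to complete. Here is a minimal Python sketch; `build_few_shot_prompt` is a hypothetical helper, and the Q/A layout simply mirrors the example above.

```python
def build_few_shot_prompt(examples, question):
    """Format (question, answer) pairs as Q/A lines, then leave the final answer open."""
    lines = []
    for q, a in examples:
        lines.append(f"Q: {q}")
        lines.append(f"A: {a}")
    # The real question goes last, with an empty "A:" for the model to complete.
    lines.append(f"Q: {question}")
    lines.append("A:")
    return "\n".join(lines)


examples = [
    ("What is the capital of France?", "Paris"),
    ("What is the largest planet?", "Jupiter"),
]
prompt = build_few_shot_prompt(examples, "Who painted the Mona Lisa?")
print(prompt)
```

Passing an empty list of examples produces a plain zero-shot prompt, so the same helper covers both styles.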

Next-Level Techniques

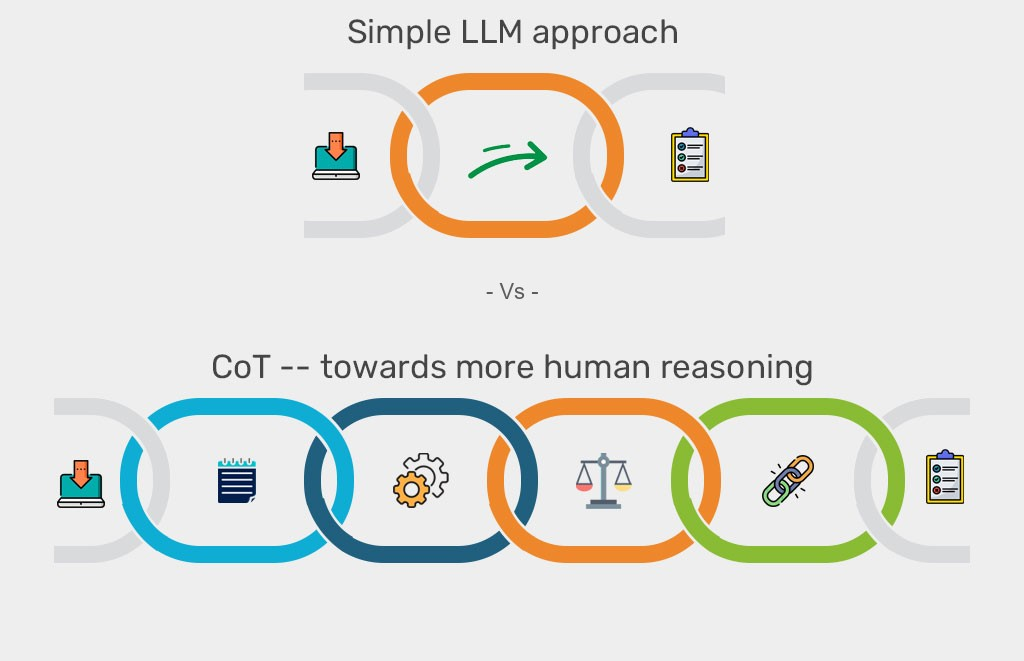

Chain-of-Thought Reasoning

Sometimes you want a model to show its work for tough questions. In that case, you can prompt it with “Explain your steps” or “Walk me through the reasoning.” This approach is called chain-of-thought prompting and is super useful for math, logic puzzles, or detailed analysis.

- Pro: More transparent and detailed responses.

- Con: Responses get longer, and the model can still make mistakes (though now you can see where its reasoning went wrong).

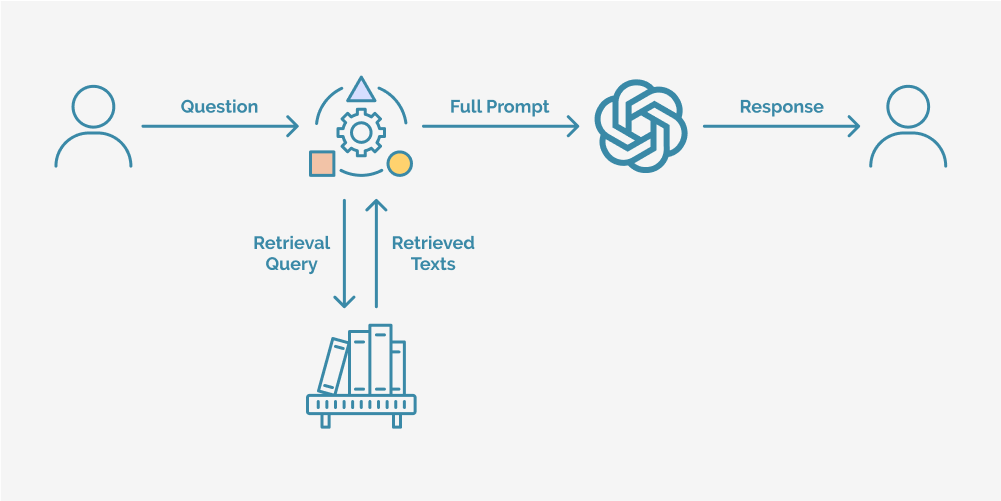
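When you use chain-of-thought prompting inside an app, it helps to ask for the reasoning *and* a clearly marked final line, so your code can pull out the result without parsing the whole explanation. A minimal sketch, assuming an “Answer: …” convention of our own choosing; both helper names are hypothetical, and `sample_response` is made-up text standing in for real model output.

```python
import re


def build_cot_prompt(question):
    """Wrap a question with an instruction to reason step by step before answering."""
    return (
        f"{question}\n"
        "Walk me through your reasoning step by step, "
        "then give the final result on its own line in the form 'Answer: <result>'."
    )


def extract_final_answer(response):
    """Return the text after the last 'Answer:' line, or None if the model omitted it."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# Made-up response, standing in for what a model might return.
sample_response = (
    "Step 1: A dozen eggs is 12 eggs.\n"
    "Step 2: Three dozen is 3 * 12 = 36.\n"
    "Answer: 36"
)
print(build_cot_prompt("How many eggs are in three dozen?"))
print(extract_final_answer(sample_response))  # 36
```

Returning `None` when the marker is missing lets you retry or flag the response instead of trusting a half-finished answer.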

Retrieval-Augmented Generation

An LLM only knows what was in its training data, so it can be out of date on questions that demand fresh, factual information, like “What happened in the news yesterday?” A retrieval system can help. It searches a knowledge base or the Internet, grabs relevant snippets, and hands them to the model along with your question so it can produce a well-informed answer.

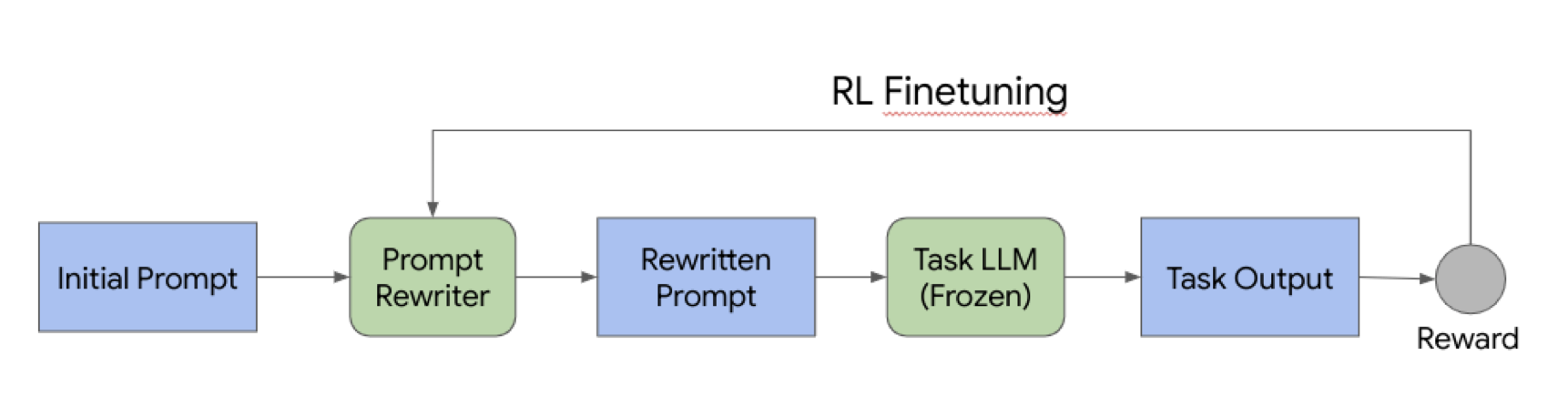
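The retrieve-then-generate flow can be sketched in a few lines. This toy version ranks documents by how many words they share with the question; real systems usually use embedding-based vector search or a search engine instead. The function names and the tiny knowledge base are illustrative assumptions, and the actual model call is left out.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query (a toy stand-in for vector search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_rag_prompt(query, documents, k=2):
    """Place the retrieved snippets before the question so the model can use them."""
    snippets = retrieve(query, documents, k)
    context = "\n".join(f"- {s}" for s in snippets)
    return (
        "Use the context below to answer the question.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


knowledge_base = [
    "The city council approved the new bike lane plan on Monday.",
    "Jupiter is the largest planet in the solar system.",
    "The bike lane construction starts next month.",
]
rag_prompt = build_rag_prompt("What happened with the bike lane plan?", knowledge_base)
print(rag_prompt)
```

The resulting prompt is what you would send to the model; only the two bike-lane snippets make it into the context.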

Reinforcement Learning for Prompt Rewriting

In some experimental setups, one model rewrites your prompt, sends the rewrite to another model, and receives a reward when the answer improves. Over time, this “prompt rewriter” learns how to phrase things better.

- Why it’s cool: It can optimize prompts automatically.

- Why it’s tricky: It’s complicated, and you often still need a human in the loop to judge quality.
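Research systems typically train a full rewriter model, which is well beyond a blog snippet. A much simpler stand-in that still captures the learn-from-reward loop is a multi-armed bandit, one of the most basic reinforcement learning settings: each fixed rewrite strategy is an “arm,” and an epsilon-greedy learner figures out which one earns the highest reward. Everything here is hypothetical, including the strategies and `toy_reward`, which just inspects the rewritten prompt; a real reward would come from judging another model’s answer.

```python
import random


def learn_best_rewrite(rewrites, prompt, reward_fn, rounds=50, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: each rewrite strategy is an arm; reward_fn scores its result."""
    rng = random.Random(seed)
    counts = [0] * len(rewrites)
    values = [0.0] * len(rewrites)  # running average reward per strategy
    for step in range(rounds):
        if step < len(rewrites):
            arm = step  # try every strategy once before exploiting
        elif rng.random() < epsilon:
            arm = rng.randrange(len(rewrites))  # explore a random strategy
        else:
            arm = max(range(len(rewrites)), key=lambda i: values[i])  # exploit best so far
        reward = reward_fn(rewrites[arm](prompt))
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    best = max(range(len(rewrites)), key=lambda i: values[i])
    return best, values


# Hypothetical rewrite strategies the "rewriter" can choose between.
rewrites = [
    lambda p: p,
    lambda p: p + " Answer in three bullet points.",
    lambda p: "You are a careful analyst. " + p + " Explain your steps.",
]


def toy_reward(rewritten_prompt):
    """Placeholder judge. A real setup would send the rewrite to another model
    and score that model's answer, often with a human reviewing quality."""
    score = 0.0
    if "Explain your steps" in rewritten_prompt:
        score += 1.0
    if "bullet" in rewritten_prompt:
        score += 0.5
    return score


best, values = learn_best_rewrite(rewrites, "Why did sales drop in March?", toy_reward)
print(best, values)
```

With a noisy, model-based reward, the occasional exploration step matters more, because early estimates can be misleading.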

Prompt Tuning & Parameter-Efficient Fine-Tuning

Instead of retraining the entire giant model, you can train only a small number of extra parameters, such as a short set of learnable “soft prompt” vectors added to the input. The original model’s weights stay frozen, so training is cheaper and faster. This approach works well when you have a specific domain (like legal text or medical data) and want the model to perform better there.
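To make the “train a tiny prompt, freeze the model” idea concrete, here is a toy sketch in plain Python. A few learnable vectors (the soft prompt) are prepended to the input embeddings, and gradient descent updates only those vectors. The “model” is an assumption for illustration: a single frozen weight vector applied to the mean of the input vectors, standing in for a real transformer. Real prompt tuning would use a deep-learning framework with automatic differentiation; here the gradient is worked out by hand.

```python
def forward(soft_prompt, token_embeddings, frozen_weights):
    """Frozen toy 'model': mean-pool all input vectors, then take a dot product."""
    sequence = soft_prompt + token_embeddings  # the soft prompt is prepended to the input
    n = len(sequence)
    dim = len(frozen_weights)
    pooled = [sum(vec[i] for vec in sequence) / n for i in range(dim)]
    return sum(w * h for w, h in zip(frozen_weights, pooled))


def prompt_tune(token_embeddings, frozen_weights, target, num_prompt_vectors=2, lr=0.5, steps=200):
    """Gradient descent on the soft-prompt vectors only; the model weights never change."""
    dim = len(frozen_weights)
    soft_prompt = [[0.0] * dim for _ in range(num_prompt_vectors)]
    n = num_prompt_vectors + len(token_embeddings)
    for _ in range(steps):
        error = forward(soft_prompt, token_embeddings, frozen_weights) - target
        # For squared error, d(loss)/d(p_j[i]) = 2 * error * w[i] / n for every prompt vector p_j.
        for vec in soft_prompt:
            for i in range(dim):
                vec[i] -= lr * 2 * error * frozen_weights[i] / n
    return soft_prompt


frozen_weights = [1.0, -0.5]  # toy model parameters, never updated
token_embeddings = [[0.2, 0.4], [0.6, -0.1]]  # toy embeddings for a two-token input
soft_prompt = prompt_tune(token_embeddings, frozen_weights, target=1.0)
print(forward(soft_prompt, token_embeddings, frozen_weights))  # approximately 1.0
```

Only four numbers are trained here while the “model” stays untouched, which is the same trade-off that makes prompt tuning cheap at real scale.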

Role-Playing Prompts

If you need text in a specific style or from a particular viewpoint, ask the model to play a role. Maybe you want it to act like a Shakespearean poet, a supportive English tutor, or a 1920s detective. This often leads to fun and unique responses, like a tutor who corrects your grammar step by step, or a poet who writes verses in Shakespearean English.
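Many chat-style APIs accept a list of messages, each with a role (such as “system” or “user”) and some content, and a system message is a natural place to set the persona. The sketch below only builds that list; `build_role_messages` is a hypothetical helper, and the exact field names and how you send the list depend on your provider.

```python
def build_role_messages(role_description, user_request):
    """Return a chat-style message list with the persona set in a system message."""
    return [
        {"role": "system", "content": f"You are {role_description}. Stay in character."},
        {"role": "user", "content": user_request},
    ]


messages = build_role_messages(
    "a supportive English tutor who corrects grammar one step at a time",
    "Please check this sentence: 'She don't like apples.'",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the persona in its own message means you can swap roles without touching the user’s request.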

Tips for Better Prompts

Be Specific

If you want Python code, say so. If you need a short bullet list, mention the desired format. The fewer assumptions the model has to make, the better.
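One way to make specificity a habit is to build prompts from explicit fields, so the language, format, and length are always spelled out. A minimal sketch; `build_specific_prompt` and its parameters are hypothetical.

```python
def build_specific_prompt(task, language=None, output_format=None, max_items=None):
    """Turn a bare task into a prompt that states its constraints explicitly."""
    parts = [task.rstrip(".") + "."]
    if language:
        parts.append(f"Use {language} for any code.")
    if output_format:
        parts.append(f"Format the answer as {output_format}.")
    if max_items:
        parts.append(f"Keep it to at most {max_items} points.")
    return " ".join(parts)


specific = build_specific_prompt(
    "Explain how to read a CSV file",
    language="Python",
    output_format="a bullet list",
    max_items=5,
)
print(specific)
```

Unset fields are simply skipped, so a simple question still produces a simple prompt.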

Iterate, Don’t Aggravate

It’s okay to refine your question if you don’t get what you want the first time. Tailor your instructions based on the model’s initial response.

Match Prompt Complexity to Task Complexity

Over-engineering a basic question is a waste of time. Simple question? Simple prompt.

Reduce “Hallucinations”

If the model may not have enough information, tell it explicitly that “I’m not sure” is an acceptable answer. If possible, use a retrieval strategy to feed it up-to-date facts.
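The two suggestions in this tip combine naturally: only send a question when you have some supporting facts, and tell the model it may answer “I’m not sure.” when those facts fall short. In this sketch, relevance is a crude word-overlap count, and the `min_overlap=2` threshold is an arbitrary assumption; the function name is hypothetical.

```python
import re


def words(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounded_prompt_or_abstain(question, snippets, min_overlap=2):
    """Return (prompt, None) when supporting snippets exist, else (None, "I'm not sure.")."""
    q = words(question)
    relevant = [s for s in snippets if len(q & words(s)) >= min_overlap]
    if not relevant:
        # Nothing to ground the answer in: abstain instead of letting the model guess.
        return None, "I'm not sure."
    context = "\n".join(f"- {s}" for s in relevant)
    prompt = (
        "Answer using only the facts below. "
        "If they are not enough, reply exactly \"I'm not sure.\"\n"
        f"Facts:\n{context}\n\nQuestion: {question}"
    )
    return prompt, None


facts = [
    "The library opens at 9 a.m. on weekdays.",
    "Parking is free after 6 p.m.",
]
prompt, fallback = grounded_prompt_or_abstain("When does the library open on weekdays?", facts)
print(prompt)
print(grounded_prompt_or_abstain("Who won the chess tournament?", facts))
```

Abstaining before the model call is a blunt guard; the instruction inside the prompt still matters for cases where the facts are related but incomplete.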

Use Self-Consistency

Ask the model the same question several times (with some randomness in sampling), then keep the answer that comes up most often, or the most coherent one.
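Self-consistency is usually described as sampling several reasoning paths and taking a majority vote over their final answers. The sketch below covers only the voting step and assumes the samples were already collected; the light normalization (case, spaces, trailing period) is our own assumption about which differences to ignore.

```python
from collections import Counter


def normalize(answer):
    """Collapse trivial differences: surrounding spaces, a trailing period, letter case."""
    return answer.strip().rstrip(".").lower()


def self_consistent_answer(sampled_answers):
    """Return the most common final answer and the fraction of samples that agree."""
    counts = Counter(normalize(a) for a in sampled_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(sampled_answers)


# Imagine these came from asking the same question five times with sampling enabled.
samples = ["36", "36.", " 36", "42", "36"]
print(self_consistent_answer(samples))  # ('36', 0.8)
```

The agreement fraction doubles as a rough confidence signal: a low value is a hint to double-check the answer.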

Mind the Context Window

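Each LLM can handle only a limited number of tokens (small chunks of text, often pieces of words) at once, and that limit usually covers both your prompt and the model’s reply. In a long or detailed conversation, earlier details can fall outside that window, so restate or summarize anything important.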
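A common way apps stay inside the limit is to keep only the most recent messages that fit in a token budget, leaving room for the reply. In this sketch, the “about 4 characters per token” estimate is a rough rule of thumb for English, not a real tokenizer (actual counts depend on each model), and `fit_to_context` is a hypothetical helper.

```python
def estimate_tokens(text):
    """Rough heuristic: about 4 characters per token for English text."""
    return max(1, len(text) // 4)


def fit_to_context(messages, max_tokens, reserve_for_reply=0):
    """Keep the most recent messages that fit in the budget, preserving their order."""
    budget = max_tokens - reserve_for_reply
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))


history = [
    "user: " + "long earlier question " * 20,
    "assistant: " + "long earlier answer " * 20,
    "user: What about the last step?",
]
trimmed = fit_to_context(history, max_tokens=120, reserve_for_reply=50)
print(trimmed)
```

Dropping the oldest messages is the simplest strategy; summarizing them into a short recap keeps more of the earlier context at the cost of an extra model call.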

Where Prompt Engineering Is Headed

- Automated Prompt Optimization: Tools that automatically find the best prompt for a given job.

- Multimodal Inputs: Using prompts with images, audio, or even videos.

- Personalized AI Assistants: Prompts tailored to your personal style, knowledge, or preferences.

- Continual Learning: Models and prompting strategies that keep learning from each exchange to stay accurate and relevant.

Wrapping Up

Prompt engineering is rapidly evolving alongside the AI models themselves. Every time you interact with an LLM—whether you’re coding, writing a blog post, or just curious—you have a chance to experiment with different prompt strategies. By mastering these techniques, you’ll get better results, cut down on random or incorrect answers, and unlock the true power of AI in your everyday tasks.

In short, how you phrase your request matters. Give it a try: craft a thoughtful prompt, see what happens, and don’t be afraid to refine it until the answer looks right. You might be surprised at just how much of a difference good prompt engineering can make.