- Published on

Depth-Aware Background Blur for Enhanced Portrait Photography

- Authors

- Name

- Astik Dahal

This blog post details a method I used for achieving a portrait-style image effect by selectively blurring the background based on depth information. I leverage the MiDaS model for robust depth estimation and combine it with image processing techniques for background manipulation. This approach allows for the automated creation of images with emphasized subjects, a technique commonly used in professional photography and readily available in modern smartphones.

Portrait Mode Effect

The "portrait mode" effect, characterized by a sharp subject against a blurred background, enhances visual appeal by drawing attention to the foreground. This technique traditionally requires specialized lenses and manual adjustments. However, with advancements in deep learning, depth estimation from a single image has become feasible, enabling automated background blurring. I utilize the MiDaS (Multi-Inference Depth Aggregation System) model, a powerful deep-learning architecture for monocular depth estimation.

My approach consists of two main stages: depth estimation and background blurring.

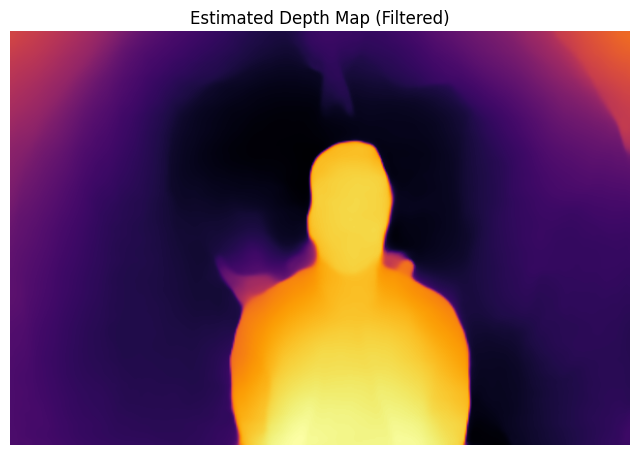

Depth Estimation using MiDaS

The MiDaS model infers a depth map from the input image, where each pixel value represents the estimated distance from the camera. I employ the DPT_Large model, known for its robustness. The following code snippet demonstrates the depth estimation process:

Python

def depth_estimation(img, model_type="DPT_Large"):

model = torch.hub.load("intel-isl/MiDaS", model_type)

model.eval()

midas_transforms = torch.hub.load("intel-isl/MiDaS", "transforms")

transform = midas_transforms.default_transform

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

input_batch = transform(img).to(device)

with torch.no_grad():

prediction = model(input_batch)

prediction = torch.nn.functional.interpolate(

prediction.unsqueeze(1), size=img.shape[:2], mode="bilinear", align_corners=False

).squeeze()

depth_map = prediction.cpu().numpy()

depth_map_filtered = cv2.medianBlur(depth_map.astype(np.float32), ksize=5) # Noise reduction

return depth_map_filtered

The depth_estimation function loads the pre-trained MiDaS model, applies necessary transformations to the input image, performs inference, and post-processes the depth map using a median filter for noise reduction.

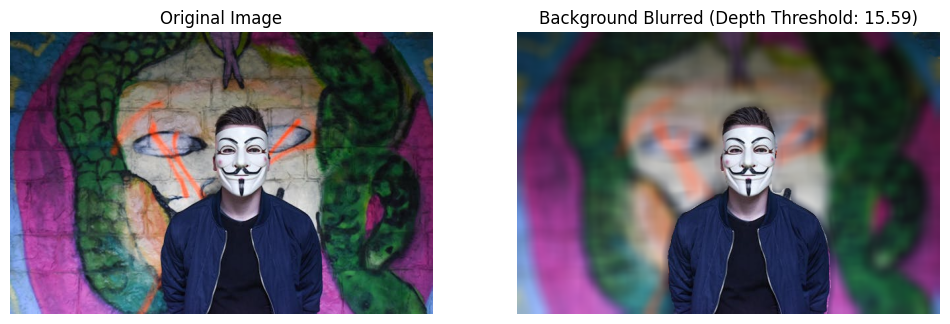

Background Blurring

Using the estimated depth map, I create a mask to distinguish between the foreground (subject) and background. Pixels beyond a certain depth threshold are considered background and are blurred using a box blur.

def blur_background(img, depth_map, blur_ksize=21, depth_threshold=None):

img_bgr = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)

d_min, d_max = depth_map.min(), depth_map.max()

if depth_threshold is None:

depth_threshold = d_min + 0.6 * (d_max - d_min)

background_mask = (depth_map < depth_threshold).astype(np.uint8)

kernel = np.ones((5,5), np.uint8)

background_mask = cv2.morphologyEx(background_mask, cv2.MORPH_OPEN, kernel)

background_mask = cv2.morphologyEx(background_mask, cv2.MORPH_CLOSE, kernel)

foreground_mask = 1 - background_mask

blurred_img_bgr = cv2.blur(img_bgr, (blur_ksize, blur_ksize))

result_bgr = np.where(foreground_mask[..., None] == 1, img_bgr, blurred_img_bgr) # Combining foreground and blurred background

result_rgb = cv2.cvtColor(result_bgr, cv2.COLOR_BGR2RGB)

return result_rgb

blurred_image = blur_background(img, depth_map)

The blur_background function converts the image to BGR (required by OpenCV's blur function), determines a depth threshold (either user-defined or a default value based on the depth map's range), creates a mask, applies morphological operations (opening and closing) to refine the mask and reduce noise, blurs the background, and combines the blurred background with the original foreground.

Results & Conclusion

The combination of MiDaS depth estimation and selective blurring effectively creates the portrait mode effect. The accuracy of the depth map directly influences the quality of the blur. The DPT_Large model generally provides good results, but challenges may arise with complex scenes or objects with similar depths. Morphological operations on the mask help to refine the foreground/background separation and reduce artifacts.

The MiDaS model significantly simplifies the process by providing robust depth estimation.

Future work could explore optimizing the blurring algorithm, incorporating user interaction for fine-tuning the blur effect, and investigating alternative depth estimation models.